Wide & Deep Learning for Recommender Systems

Paper Summary

This blog post summarizes the paper "Wide & Deep Learning for Recommender Systems".

Introduction

Memorization involves acquiring knowledge about frequent co-occurrences of data features, while generalization pertains to the capability of making use of novel feature combinations not encountered during the training phase.

Generalized linear models (GLMs) incorporating cross-product transformations for feature interactions are commonly used due to their simplicity and interpretability. However, they exhibit limitations when it comes to generalizing to previously unseen cross-product transformations. An example of such a transformation is when we consider the condition AND(user_installed_app=netflix, impression_app=pandora), which evaluates to 1 when a user has Netflix installed and is shown Pandora.

On the other hand, deep neural networks can generalize to previously unobserved feature pairs by learning low-dimensional embedding vectors. Nevertheless, these networks can over-generalize and recommend less relevant items when the underlying query-item matrix is sparse and high-rank (e.g., users with specific preferences or niche items).

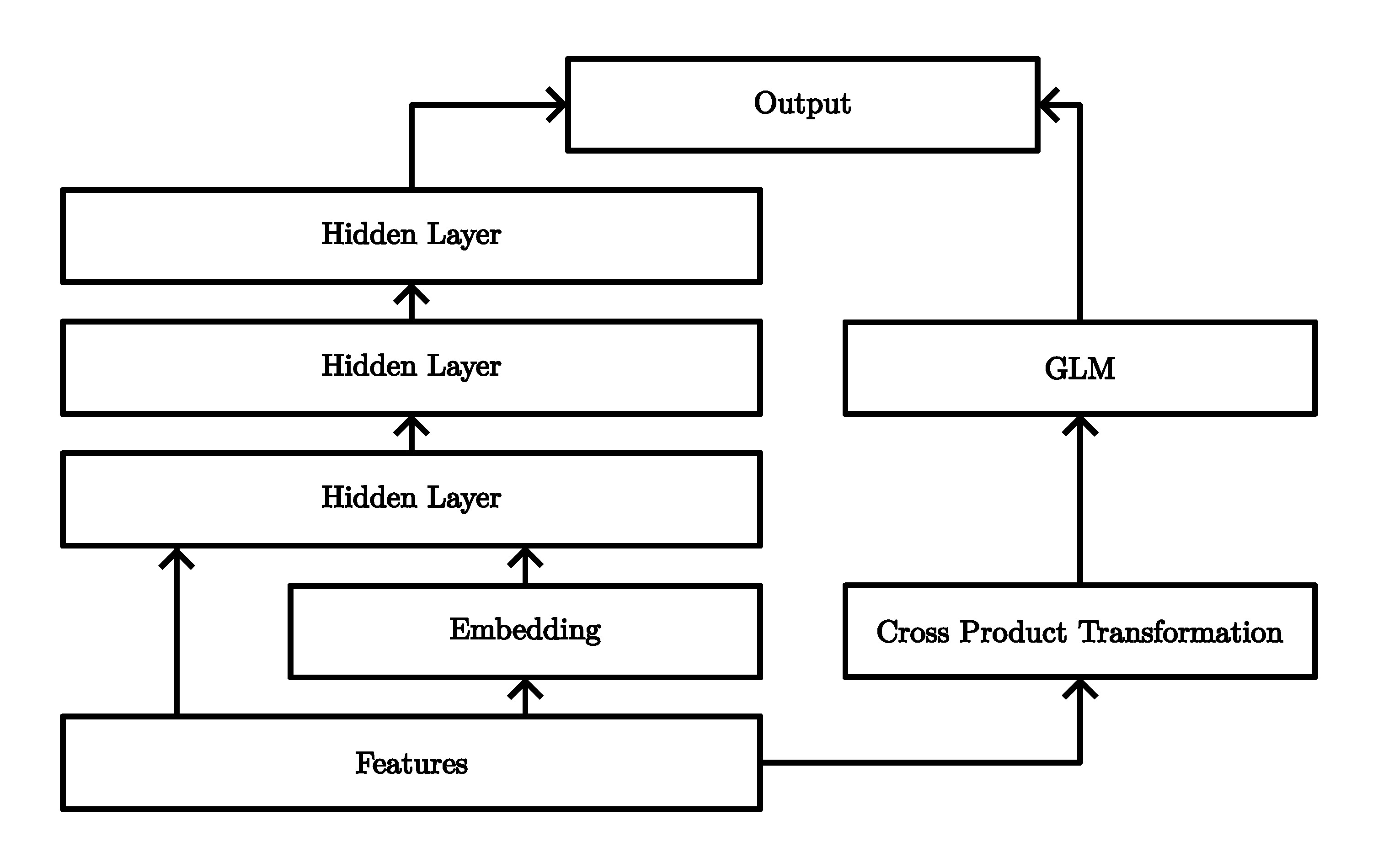

The authors of the paper introduce the Wide & Deep learning framework, which involves the simultaneous training of a deep neural network and a GLM for recommender systems (the summary focuses on the concept behind Wide & Deep learning rather than the specifics of recommender systems).

Wide & Deep Learning

Wide

The wide component of this framework comprises a GLM represented as

\[y = \mathbf{w}^\top \mathbf{x} + b\]

where \(y\) represents the predicted value, \(\mathbf{x}\) denotes the input features, \(\mathbf{w}\) represents the model weights, and \(b\) is the bias term. In addition to the raw input features, the authors feed the GLM cross-product transformations, defined as

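\[\phi_k(\mathbf{x}) = \prod_{i=1}^d x_i^{c_{ki}}, \quad c_{ki} \in \{0, 1\}\]

where \(c_{ki}\) is 1 if the \(i\)-th feature is a part of the \(k\)-th transformation and 0 otherwise. For binary features, \(\phi_k(\mathbf{x})\) is 1 only if every feature that takes part in the transformation is 1. These cross-product transformations introduce non-linearity to the GLM.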
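To make the formula concrete, here is a minimal sketch of a single cross-product transformation over binary features. The three-feature layout and the names `cross_product`, `mask`, `x_both`, and `x_one` are assumptions made for illustration; they do not come from the paper.

```python
from math import prod

def cross_product(x, c_k):
    """phi_k(x) = prod_i x_i ** c_ki for binary features x and a 0/1 mask c_k."""
    # In Python 0 ** 0 == 1, so features outside the transformation (c_ki = 0)
    # never affect the product, whatever their value.
    return prod(x_i ** c for x_i, c in zip(x, c_k))

# Hypothetical feature layout (illustrative only):
# [user_installed_app=netflix, impression_app=pandora, impression_app=youtube]
mask = [1, 1, 0]  # AND(user_installed_app=netflix, impression_app=pandora)

x_both = [1, 1, 0]  # Netflix installed, Pandora shown
x_one = [1, 0, 1]   # Netflix installed, YouTube shown

phi_both = cross_product(x_both, mask)  # all participating features are 1
phi_one = cross_product(x_one, mask)    # one participating feature is 0

# The wide input is the raw features with the transformed feature appended.
wide_input = x_both + [phi_both]
```

Here `phi_both` is 1 and `phi_one` is 0, so the GLM can attach a dedicated weight to exactly this co-occurrence.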

Deep

The deep segment of the architecture constitutes a simple feed-forward neural network. Categorical features, expressed as strings, are transformed into dense vectors through an embedding process. These embedding vectors are fed into additional hidden layers and optimized as part of the entire model to minimize the final loss.
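The embedding lookup and a single hidden layer can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' implementation: the vocabulary, the dimensions, the shared embedding table, the random initialization, and the ReLU activation are all assumptions.

```python
import random

random.seed(0)

EMBED_DIM = 4   # hypothetical embedding size
HIDDEN_DIM = 3  # hypothetical hidden-layer width

# Hypothetical vocabulary mapping categorical strings to embedding rows.
vocab = {"netflix": 0, "pandora": 1, "youtube": 2}

def random_matrix(rows, cols):
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

# One dense vector per category; a single shared table is used for brevity.
embeddings = random_matrix(len(vocab), EMBED_DIM)
W1 = random_matrix(HIDDEN_DIM, 2 * EMBED_DIM)  # acts on two concatenated embeddings
b1 = [0.0] * HIDDEN_DIM

def relu(v):
    return [max(0.0, z) for z in v]

def dense(W, b, a):
    """Compute W a + b for one fully connected layer."""
    return [sum(w * x for w, x in zip(row, a)) + bias for row, bias in zip(W, b)]

def deep_forward(installed_app, impression_app):
    # Embedding lookup: string -> row index -> dense vector.
    e_installed = embeddings[vocab[installed_app]]
    e_impression = embeddings[vocab[impression_app]]
    a0 = e_installed + e_impression  # list concatenation of the two embeddings
    return relu(dense(W1, b1, a0))   # hidden activations of the first layer

hidden = deep_forward("netflix", "pandora")
```

During training, the entries of `embeddings` would be updated by backpropagation together with `W1` and `b1`, which is what lets the model produce a non-trivial score for app pairs it has never seen together.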

Joint Training

Both parts of the model are trained concurrently by backpropagating the gradients to update both the wide and deep components. In the recommender system experiment, a logistic loss function is employed, and the wide model is optimized using a Follow-the-regularized-leader (FTRL) algorithm with \(\ell_1\)-regularization, while the deep model employs AdaGrad. The figure below shows the structure of the Wide & Deep model architecture.
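As a rough illustration of joint training, the sketch below sums the wide and deep logits, passes the sum through a sigmoid, and updates both sets of weights from the same logistic-loss gradient. It makes several simplifying assumptions: plain SGD stands in for both FTRL and AdaGrad, the final deep activations `deep_a` are treated as fixed inputs (in the full model the gradient also flows back through the hidden layers and embeddings), and all values are toy numbers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(wide_w, deep_w, bias, wide_x, deep_a):
    """P(y=1 | x): sigmoid of the wide logit plus the deep logit plus the bias."""
    wide_logit = sum(w * x for w, x in zip(wide_w, wide_x))
    deep_logit = sum(w * a for w, a in zip(deep_w, deep_a))
    return sigmoid(wide_logit + deep_logit + bias)

def log_loss(p, y):
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def joint_sgd_step(wide_w, deep_w, bias, wide_x, deep_a, y, lr=0.1):
    """One plain-SGD step (a stand-in for FTRL and AdaGrad) on the logistic loss."""
    p = predict(wide_w, deep_w, bias, wide_x, deep_a)
    err = p - y  # gradient of the log loss with respect to the summed logit
    # The same error signal updates both components in a single step.
    new_wide = [w - lr * err * x for w, x in zip(wide_w, wide_x)]
    new_deep = [w - lr * err * a for w, a in zip(deep_w, deep_a)]
    return new_wide, new_deep, bias - lr * err

# Toy example: 3 wide features (2 raw + 1 cross-product) and 2 deep activations.
wide_x, deep_a, y = [1.0, 1.0, 1.0], [0.5, 0.2], 1
wide_w, deep_w, bias = [0.0] * 3, [0.0] * 2, 0.0

loss_before = log_loss(predict(wide_w, deep_w, bias, wide_x, deep_a), y)
for _ in range(20):
    wide_w, deep_w, bias = joint_sgd_step(wide_w, deep_w, bias, wide_x, deep_a, y)
loss_after = log_loss(predict(wide_w, deep_w, bias, wide_x, deep_a), y)
```

Because both components see the error of the combined prediction, the wide part only has to correct what the deep part gets wrong, rather than each being trained as a standalone model.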

Experimental Results

The authors conducted online A/B testing for 3 weeks to measure app acquisition gain. They selected three groups, each consisting of 1% of users chosen at random. The control group received recommendations from a highly optimized wide-only GLM, which served as the baseline. Relative to this baseline, the Deep-only model exhibited a +2.9% increase in app acquisition, while the Wide & Deep model demonstrated a +3.9% improvement.

The authors also report the Area Under the Receiver Operating Characteristic Curve (AUC) on an offline holdout test set, where the Wide & Deep framework improves only marginally over the baseline.

| Model | Online Acquisition Gain | Offline AUC |
|---|---|---|
| Wide | 0% | 0.726 |
| Deep | +2.9% | 0.722 |
| Wide & Deep | +3.9% | 0.728 |
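For readers unfamiliar with the offline metric, AUC can be read as the probability that a randomly chosen positive example is scored above a randomly chosen negative one. The following is a minimal pairwise sketch with made-up labels and scores; it is not the evaluation code behind the numbers in the table.

```python
def auc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))

# Made-up toy data: 1 = app acquired, 0 = not acquired.
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
toy_auc = auc(labels, scores)  # 3 of the 4 positive/negative pairs are ordered correctly
```

The pairwise loop is quadratic in the number of examples, so it is only suitable for small illustrations like this one.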

Conclusion

The Wide & Deep learning framework introduced by the authors combines the strengths of both generalized linear models (GLMs) and deep neural networks to enhance recommender systems. By jointly training a deep neural network and a GLM, the framework combines the memorization capabilities of GLMs and the generalization capabilities of deep neural networks. The experimental results highlight that the Wide & Deep framework improves upon previously used models.